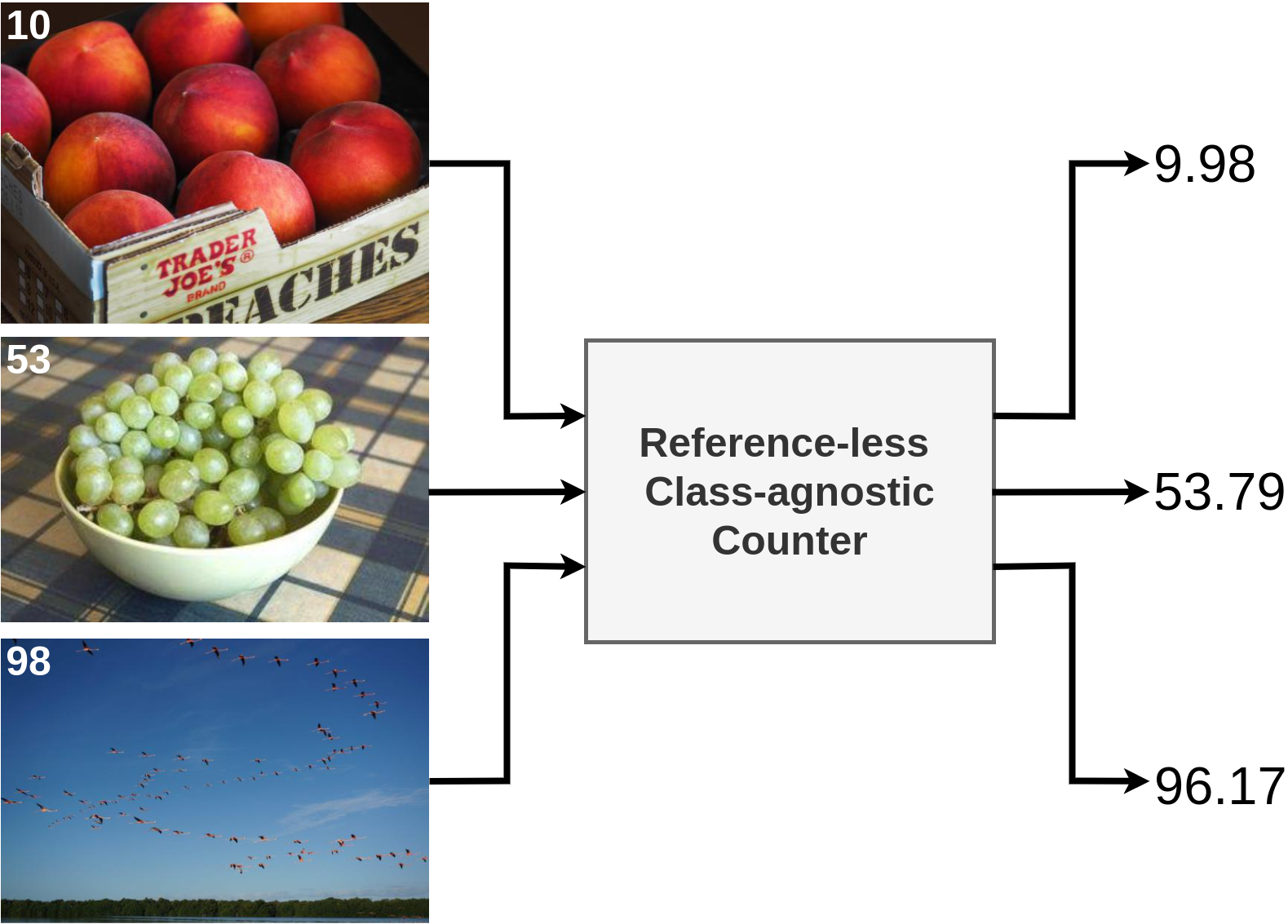

Learning to Count Anything: Reference-less Class-agnostic Counting with Weak Supervision

Abstract

Object counting is a seemingly simple task with diverse real-world applications. Most counting methods focus on counting instances of specific, known classes. While there are class-agnostic counting methods that can generalise to unseen classes, these methods require reference images to define the type of object to be counted, as well as instance annotations during training. We identify that counting is, at its core, a repetition-recognition task and show that a general feature space, with global context, is sufficient to enumerate instances in an image without a prior on the object type present. Specifically, we demonstrate that self-supervised vision transformer features combined with a lightweight count regression head achieve competitive results when compared to other class-agnostic counting methods, without the need for point-level supervision or reference images. Our method thus facilitates counting on a constantly changing set composition. To the best of our knowledge, ours is both the first reference-less class-agnostic counting method and the first weakly-supervised class-agnostic counting method.
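The idea of regressing a count from general image features using only image-level counts as supervision can be illustrated with a toy sketch. This is not the authors' architecture: the synthetic two-channel "patch features" stand in for frozen self-supervised backbone features, and mean pooling, a linear head, the squared-error loss, and all function names (`pool_features`, `predict_count`, `train_count_head`, `make_synthetic_image`) are assumptions made for illustration.

```python
import random


def pool_features(patch_features):
    """Average per-patch feature vectors into one image-level vector."""
    dim = len(patch_features[0])
    n = len(patch_features)
    return [sum(p[d] for p in patch_features) / n for d in range(dim)]


def predict_count(pooled, weights, bias):
    """Linear count-regression head: one scalar count per image."""
    return sum(w * x for w, x in zip(weights, pooled)) + bias


def train_count_head(dataset, dim, epochs=500, lr=0.2):
    """Fit the head with SGD on a squared-error loss (an assumed loss).

    `dataset` is a list of (patch_features, count) pairs. The only label
    is the image-level count: no point annotations, no reference images.
    """
    weights = [0.0] * dim
    bias = 0.0
    pooled_data = [(pool_features(f), c) for f, c in dataset]
    for _ in range(epochs):
        for pooled, count in pooled_data:
            error = predict_count(pooled, weights, bias) - count
            # Gradient of 0.5 * error**2 with respect to weights and bias.
            for d in range(dim):
                weights[d] -= lr * error * pooled[d]
            bias -= lr * error
    return weights, bias


def make_synthetic_image(rng, num_patches=16):
    """Hypothetical stand-in for backbone features.

    Channel 0 is 1.0 on patches containing an object; channel 1 is an
    uninformative background value.
    """
    count = rng.randint(0, 8)
    object_patches = set(rng.sample(range(num_patches), count))
    feats = [
        [1.0 if i in object_patches else 0.0, rng.random()]
        for i in range(num_patches)
    ]
    return feats, count


if __name__ == "__main__":
    rng = random.Random(0)
    train = [make_synthetic_image(rng) for _ in range(40)]
    held_out = [make_synthetic_image(rng) for _ in range(20)]
    w, b = train_count_head(train, dim=2)
    mae = sum(
        abs(predict_count(pool_features(f), w, b) - c) for f, c in held_out
    ) / len(held_out)
    print(f"held-out mean absolute error: {mae:.3f}")
```

In this toy setting the head only has to learn which channel responds to repeated objects. In a realistic setting, that repetition signal would have to come from the feature extractor itself.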

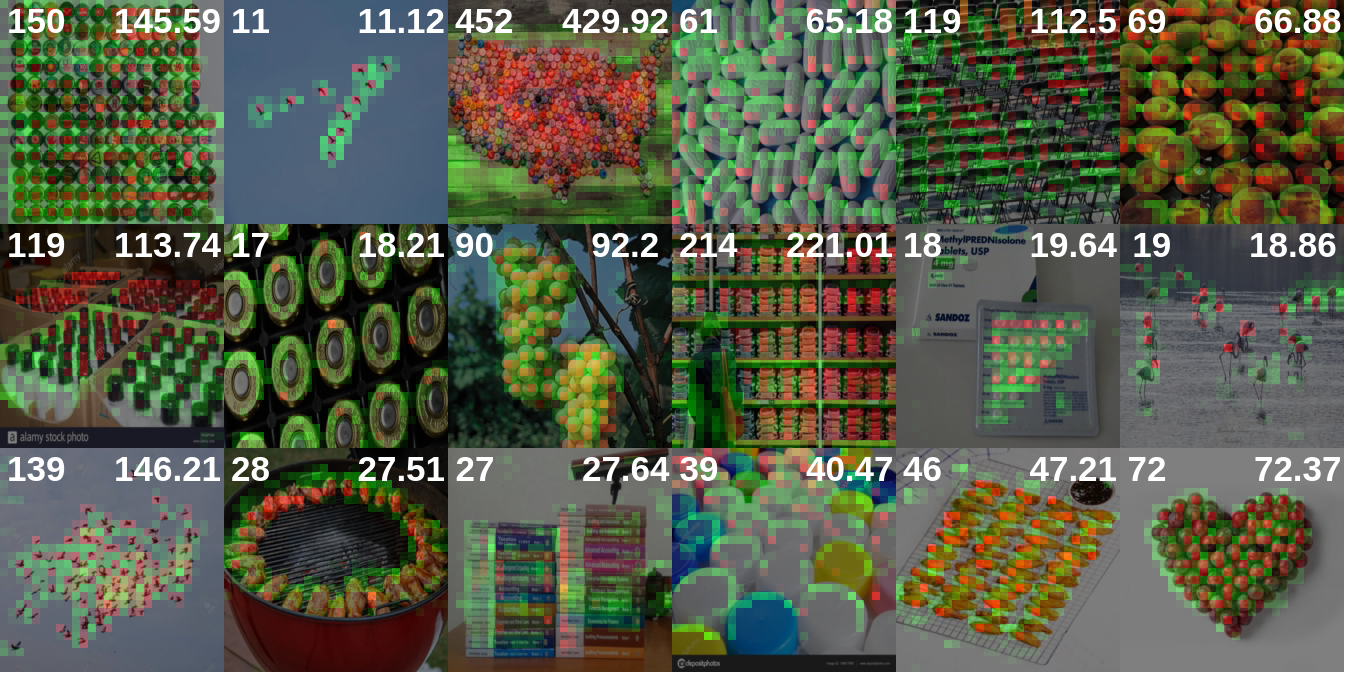

Visualisation of the Latent Counting Features

We overlay the two most significant feature channels (shown in red and green) of the penultimate layer of our counting head on images from FSC-147. The ground truth and predicted counts are shown in the top left and top right of each image, respectively.

Acknowledgement

The authors would like to thank Henry Howard-Jenkins and Theo Costain for insightful discussions.

BibTeX

@article{hobley2022-LTCA,
  title={Learning to Count Anything: Reference-less Class-agnostic Counting with Weak Supervision},
  author={Hobley, Michael and Prisacariu, Victor},
  journal={Proceedings of the {IEEE} Conference on Computer Vision and Pattern Recognition ({CVPR})},
  year={2023}
}